Google Professional Cloud Architect Exam Page 3 (Dumps)

Question No:-21

Your company's user-feedback portal comprises a standard LAMP stack replicated across two zones. It is deployed in the us-central1 region and uses autoscaled managed instance groups on all layers, except the database. Currently, only a small group of select customers has access to the portal. The portal meets a 99.99% availability SLA under these conditions. However, next quarter, your company will be making the portal available to all users, including unauthenticated users. You need to develop a resiliency testing strategy to ensure the system maintains the SLA once the additional user load is introduced.

What should you do?

1. Capture existing users' input, and replay captured user load until autoscale is triggered on all layers. At the same time, terminate all resources in one of the zones

2. Create synthetic random user input, replay synthetic load until autoscale logic is triggered on at least one layer, and introduce "chaos" to the system by terminating random resources on both zones

3. Expose the new system to a larger group of users, and increase group size each day until autoscale logic is triggered on all layers. At the same time, terminate random resources on both zones

4. Capture existing users' input, and replay captured user load until resource utilization crosses 80%. Also, derive an estimated number of users based on existing users' usage of the app, and deploy enough resources to handle 200% of expected load

Answer:-2. Create synthetic random user input, replay synthetic load until autoscale logic is triggered on at least one layer, and introduce "chaos" to the system by terminating random resources on both zones

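A toy Python simulation can make the chosen strategy concrete. Everything in it is a hypothetical model, not a GCP API: the zone names, the per-instance capacity, and the 80% scale-out rule are assumptions. It replays random synthetic load until the web layer autoscales once, then terminates instances picked at random from either zone and checks that the remaining capacity still covers the load.

```python
import random
from dataclasses import dataclass, field

CAPACITY_PER_INSTANCE = 100  # hypothetical requests/s that one instance can serve


@dataclass
class Tier:
    """One autoscaled layer spread across two zones (hypothetical model)."""
    name: str
    instances: dict = field(
        default_factory=lambda: {"us-central1-a": 1, "us-central1-b": 1})

    def capacity(self) -> int:
        return CAPACITY_PER_INSTANCE * sum(self.instances.values())

    def autoscale(self, load: int) -> bool:
        """Assumed rule: add one instance to the smaller zone above 80% utilization."""
        if load > 0.8 * self.capacity():
            zone = min(self.instances, key=self.instances.get)
            self.instances[zone] += 1
            return True
        return False


def run_chaos_test(seed: int = 42, kills: int = 2) -> bool:
    rng = random.Random(seed)  # fixed seed makes a failing run replayable
    web = Tier("web")
    load = 0
    # 1. Replay synthetic random load until autoscaling fires on this layer.
    scaled = False
    while not scaled:
        load += rng.randint(10, 50)
        scaled = web.autoscale(load)
    # 2. Chaos: terminate instances picked at random from either zone,
    #    giving the autoscaler one step to react after each termination.
    for _ in range(kills):
        zone = rng.choice(list(web.instances))
        if web.instances[zone] > 0:
            web.instances[zone] -= 1
        web.autoscale(load)
    # 3. SLA check: remaining capacity must still cover the load.
    return web.capacity() >= load
```

In a real test, step 2 would terminate actual instances in both zones while load is replayed, and step 3 would be measured against the SLA rather than computed.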

Question No:-22

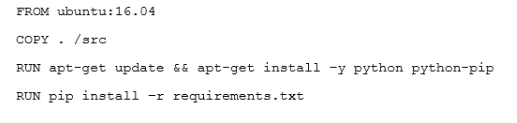

One of the developers on your team deployed their application in Google Container Engine with the Dockerfile shown above. They report that their application deployments are taking too long.

You want to optimize this Dockerfile for faster deployment times without adversely affecting the app's functionality.

Which two actions should you take? (Choose two.)

1. Remove Python after running pip

2. Remove dependencies from requirements.txt

3. Use a slimmed-down base image like Alpine Linux

4. Use larger machine types for your Google Container Engine node pools

5. Copy the source after the package dependencies (Python and pip) are installed

Answer:-3 and 5

Hint:-

Deployment speed can be improved by limiting the size of the uploaded app, limiting the complexity of the build in the Dockerfile (if present), and ensuring a fast and reliable internet connection.

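Why option 5 helps can be shown with a simplified model of Docker's layer cache, in Python: each instruction's cache key chains its parent's key with the instruction text, and COPY steps also hash the files they copy, so once one layer is invalidated every later layer is rebuilt. The two Dockerfiles below are hypothetical (the real one is only in the image), and the hashing is an approximation of Docker's behavior, not its actual algorithm.

```python
import hashlib

# Hypothetical Dockerfiles as (instruction, files copied by that instruction).
SOURCE_FIRST = [
    ("FROM python:3-alpine", []),
    ("COPY . /app", ["requirements.txt", "app.py"]),
    ("RUN pip install -r /app/requirements.txt", []),
]
DEPS_FIRST = [
    ("FROM python:3-alpine", []),
    ("COPY requirements.txt /app/", ["requirements.txt"]),
    ("RUN pip install -r /app/requirements.txt", []),
    ("COPY . /app", ["requirements.txt", "app.py"]),
]


def layer_keys(steps, files):
    """Simplified cache key per step: parent key + instruction (+ copied file contents)."""
    keys, parent = [], ""
    for instruction, copied in steps:
        h = hashlib.sha256(parent.encode())
        h.update(instruction.encode())
        for path in copied:  # COPY layers depend on the bytes they copy
            h.update(files[path].encode())
        parent = h.hexdigest()
        keys.append(parent)
    return keys


def rebuilt(steps, old_files, new_files):
    """Instructions whose cached layer is invalidated between two builds."""
    old, new = layer_keys(steps, old_files), layer_keys(steps, new_files)
    return [step[0] for step, a, b in zip(steps, old, new) if a != b]


before = {"requirements.txt": "flask\n", "app.py": "print('v1')\n"}
after = {**before, "app.py": "print('v2')\n"}  # only application code changed

print(rebuilt(SOURCE_FIRST, before, after))
# ['COPY . /app', 'RUN pip install -r /app/requirements.txt']
print(rebuilt(DEPS_FIRST, before, after))
# ['COPY . /app']
```

With the source copied first, every code change reruns `pip install`; copying only the dependency manifest first lets that expensive layer stay cached.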
Note: Alpine Linux is built around musl libc and busybox. This makes it smaller and more resource-efficient than traditional GNU/Linux distributions. A container requires no more than 8 MB, and a minimal installation to disk requires around 130 MB of storage. You get not only a full-fledged Linux environment but also a large selection of packages from the repository.

Reference:-https://groups.google.com/forum/#!topic/google-appengine/hZMEkmmObDU

Reference:-https://www.alpinelinux.org/about/

Question No:-23

Your solution is producing performance bugs in production that you did not see in staging and test environments. You want to adjust your test and deployment procedures to avoid this problem in the future.

What should you do?

1. Deploy fewer changes to production

2. Deploy smaller changes to production

3. Increase the load on your test and staging environments

4. Deploy changes to a small subset of users before rolling out to production

Answer:-4. Deploy changes to a small subset of users before rolling out to production

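A common way to send changes to a small subset of users first is a canary split keyed on a stable user identifier. The Python sketch below assumes string user IDs and a percentage knob; the function names are hypothetical. Hashing makes the assignment deterministic, so a user stays in the same group across requests, and raising the percentage only adds users to the canary.

```python
import hashlib


def in_canary(user_id: str, percent: float) -> bool:
    """Deterministically place roughly `percent`% of users in the canary group."""
    digest = hashlib.sha256(user_id.encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 10_000  # 0..9999
    return bucket < percent * 100


def route(user_id: str, percent: float) -> str:
    """Pick which deployment serves this user."""
    return "canary" if in_canary(user_id, percent) else "stable"
```

A rollout might start at 1%, compare the canary's latency and error rates with the stable version, and widen the percentage only while those metrics hold.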

Question No:-24

A small number of API requests to your microservices-based application take a very long time. You know that each request to the API can traverse many services.

You want to know which service takes the longest in those cases.

What should you do?

1. Set timeouts on your application so that you can fail requests faster

2. Send custom metrics for each of your requests to Stackdriver Monitoring

3. Use Stackdriver Monitoring to look for insights that show when your API latencies are high

4. Instrument your application with Stackdriver Trace in order to break down the request latencies at each microservice

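Answer:-4. Instrument your application with Stackdriver Trace in order to break down the request latencies at each microservice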

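Option 4's idea of breaking a slow request down per microservice can be illustrated with the kind of span data a tracer records. The span dictionaries below use hypothetical field names, not the Cloud Trace API schema. Each span's own time is its duration minus its direct children's durations, which assumes children run sequentially inside their parent (parallel children would need interval merging).

```python
from collections import defaultdict

# Hypothetical trace of one slow request (times in ms).
EXAMPLE_TRACE = [
    {"id": 1, "parent": None, "service": "gateway", "start": 0, "end": 1200},
    {"id": 2, "parent": 1, "service": "auth", "start": 10, "end": 60},
    {"id": 3, "parent": 1, "service": "orders", "start": 70, "end": 1150},
    {"id": 4, "parent": 3, "service": "inventory", "start": 100, "end": 1100},
]


def self_time_by_service(spans):
    """Attribute each span's own time (duration minus direct children) to its service."""
    child_time = defaultdict(float)
    for s in spans:
        if s["parent"] is not None:
            child_time[s["parent"]] += s["end"] - s["start"]
    totals = defaultdict(float)
    for s in spans:
        totals[s["service"]] += (s["end"] - s["start"]) - child_time[s["id"]]
    return dict(totals)


def slowest_service(spans):
    totals = self_time_by_service(spans)
    return max(totals, key=totals.get)
```

Here the gateway's 1200 ms end-to-end latency is mostly spent in `inventory` (1000 ms of its own time), which is the per-service view an aggregate latency metric cannot give.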
Question No:-25

During a high traffic portion of the day, one of your relational databases crashes, but the replica is never promoted to a master. You want to avoid this in the future.

What should you do?

1. Use a different database

2. Choose larger instances for your database

3. Create snapshots of your database more regularly

4. Implement routinely scheduled failovers of your databases

Answer:-4. Implement routinely scheduled failovers of your databases

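The value of scheduled failovers is that they exercise the promotion path before a real outage does. The toy Python model below is hypothetical (node names and the `auto_failover_enabled` flag are illustrative, not a database or Cloud SQL API): a drill deliberately fails the primary and checks that writes still succeed, so a broken promotion setting is caught during the drill.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    healthy: bool = True
    role: str = "replica"


class Cluster:
    """Toy primary/replica pair with an automatic-failover hook (hypothetical)."""

    def __init__(self, auto_failover_enabled: bool = True):
        self.primary = Node("db-a", role="primary")
        self.replica = Node("db-b")
        self.auto_failover_enabled = auto_failover_enabled

    def on_primary_failure(self) -> None:
        self.primary.healthy = False
        # Promote the replica only if failover is configured and the replica is healthy.
        if self.auto_failover_enabled and self.replica.healthy:
            self.primary, self.replica = self.replica, self.primary
            self.primary.role, self.replica.role = "primary", "replica"

    def can_write(self) -> bool:
        return self.primary.healthy


def failover_drill(cluster: Cluster) -> bool:
    """Scheduled drill: fail the primary on purpose and confirm writes continue."""
    cluster.on_primary_failure()
    return cluster.can_write()
```

Running `failover_drill` on a misconfigured cluster returns `False` during a planned window instead of during peak traffic.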

Question No:-26

Your organization requires that metrics from all applications be retained for 5 years for future analysis in possible legal proceedings.

Which approach should you use?

1. Grant the security team access to the logs in each Project

2. Configure Stackdriver Monitoring for all Projects, and export to BigQuery

3. Configure Stackdriver Monitoring for all Projects with the default retention policies

4. Configure Stackdriver Monitoring for all Projects, and export to Google Cloud Storage

Answer:-2. Configure Stackdriver Monitoring for all Projects, and export to BigQuery

Note:-

Stackdriver Logging lets you filter, search, and view logs from your cloud and open-source application services. It also lets you define metrics based on log contents that are incorporated into dashboards and alerts, and it can export logs to BigQuery, Google Cloud Storage, and Pub/Sub.

Question No:-27

Your company has decided to build a backup replica of their on-premises user authentication PostgreSQL database on Google Cloud Platform. The database is 4 TB, and large updates are frequent. Replication requires private address space communication.

Which networking approach should you use?

1. Google Cloud Dedicated Interconnect

2. Google Cloud VPN connected to the data center network

3. A NAT and TLS translation gateway installed on-premises

4. A Google Compute Engine instance with a VPN server installed connected to the data center network

Answer:-1. Google Cloud Dedicated Interconnect

Note:-

Google Cloud Dedicated Interconnect provides direct physical connections and RFC 1918 communication between your on-premises network and Google's network. Dedicated Interconnect enables you to transfer large amounts of data between networks, which can be more cost-effective than purchasing additional bandwidth over the public Internet or using VPN tunnels.

Benefits:

- Traffic between your on-premises network and your VPC network doesn't traverse the public Internet. Traffic traverses a dedicated connection with fewer hops, meaning there are fewer points of failure where traffic might get dropped or disrupted.

- Your VPC network's internal (RFC 1918) IP addresses are directly accessible from your on-premises network. You don't need to use a NAT device or VPN tunnel to reach internal IP addresses. Currently, you can only reach internal IP addresses over a dedicated connection. To reach Google external IP addresses, you must use a separate connection.

- You can scale your connection to Google based on your needs. Connection capacity is delivered over one or more 10 Gbps Ethernet connections, with a maximum of eight connections (80 Gbps total per interconnect).

- The cost of egress traffic from your VPC network to your on-premises network is reduced. A dedicated connection is generally the least expensive method if you have a high volume of traffic to and from Google's network.

Reference:-https://cloud.google.com/interconnect/docs/details/dedicated

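The capacity figures above can be turned into a rough estimate of how long a full copy of the 4 TB database takes. The helper below is a back-of-envelope sketch in Python: it assumes decimal terabytes, full line rate unless an `efficiency` factor is given, and ignores protocol overhead and replication catch-up.

```python
def transfer_hours(size_tb: float, link_gbps: float, links: int = 1,
                   efficiency: float = 1.0) -> float:
    """Hours to move size_tb (decimal TB) over `links` circuits of link_gbps each."""
    bits = size_tb * 1e12 * 8                         # TB -> bits
    rate_bps = link_gbps * 1e9 * links * efficiency   # aggregate usable bits/s
    return bits / rate_bps / 3600


# 4 TB over one 10 Gbps connection: 3.2e13 bits / 1e10 bit/s = 3200 s.
print(round(transfer_hours(4, 10), 3))           # 0.889
# The same copy over eight 10 Gbps connections (80 Gbps): 400 s.
print(round(transfer_hours(4, 10, links=8), 3))  # 0.111
```

Because "large updates are frequent", the sustained rate matters as much as the initial copy; plugging a measured `efficiency` into the same formula gives a more realistic figure.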

Question No:-28

Auditors visit your teams every 12 months and ask to review all the Google Cloud Identity and Access Management (Cloud IAM) policy changes in the previous 12 months. You want to streamline and expedite the analysis and audit process.

What should you do?

1. Create custom Google Stackdriver alerts and send them to the auditor

2. Enable Logging export to Google BigQuery and use ACLs and views to scope the data shared with the auditor

3. Use cloud functions to transfer log entries to Google Cloud SQL and use ACLs and views to limit an auditor's view

4. Enable Google Cloud Storage (GCS) log export to audit logs into a GCS bucket and delegate access to the bucket

Answer:-4. Enable Google Cloud Storage (GCS) log export to audit logs into a GCS bucket and delegate access to the bucket

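Once audit logs are exported to a GCS bucket, an auditor still has to find the IAM policy changes in them. The Python sketch below assumes the exported files hold newline-delimited JSON LogEntry records with UTC `timestamp` values ending in `Z`, and treats any `protoPayload.methodName` ending in `SetIamPolicy` as a policy change; that match rule is a simplification, since some services log IAM changes under other method names.

```python
import json
from datetime import datetime, timedelta, timezone


def iam_policy_changes(lines, now, days=365):
    """Yield (timestamp, principal, resource) for SetIamPolicy entries in the window.

    `lines`: iterable of JSON LogEntry strings; `now`: timezone-aware datetime.
    """
    cutoff = now - timedelta(days=days)
    for line in lines:
        entry = json.loads(line)
        payload = entry.get("protoPayload", {})
        if not payload.get("methodName", "").endswith("SetIamPolicy"):
            continue
        # Keep whole seconds only; assumes a UTC timestamp such as 2024-03-01T10:00:00.123Z.
        ts = datetime.strptime(entry["timestamp"][:19], "%Y-%m-%dT%H:%M:%S")
        ts = ts.replace(tzinfo=timezone.utc)
        if ts >= cutoff:
            principal = payload.get("authenticationInfo", {}).get("principalEmail")
            yield ts, principal, payload.get("resourceName")
```

Reading the bucket objects and feeding their lines into this function is left out here; the filter itself is what narrows a year of logs down to the changes the auditors ask about.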

Question No:-29

You are designing a large distributed application with 30 microservices. Each of your distributed microservices needs to connect to a database back-end. You want to store the credentials securely.

Where should you store the credentials?

1. In the source code

2. In an environment variable

3. In a secret management system

4. In a config file that has restricted access through ACLs

Answer:-3. In a secret management system

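Option 3 in practice means each microservice fetches its database credentials at startup instead of carrying them in code or config. The Python sketch below assumes a client shaped like `google.cloud.secretmanager.SecretManagerServiceClient` (`access_secret_version(request={"name": ...})` returning an object with `payload.data` bytes); the project and secret names and the `user:password` secret format are hypothetical. The client is injected, so an in-memory stand-in can replace it in tests.

```python
from dataclasses import dataclass
from types import SimpleNamespace


@dataclass
class DbCredentials:
    user: str
    password: str


def load_db_credentials(client, project_id: str, secret_id: str,
                        version: str = "latest") -> DbCredentials:
    """Fetch 'user:password' (assumed format) from the secret manager."""
    name = f"projects/{project_id}/secrets/{secret_id}/versions/{version}"
    response = client.access_secret_version(request={"name": name})
    user, password = response.payload.data.decode("utf-8").split(":", 1)
    return DbCredentials(user, password)


class InMemorySecretClient:
    """Hypothetical test double that mimics only the call used above."""

    def __init__(self, secrets):
        self.secrets = secrets  # full version name -> secret bytes

    def access_secret_version(self, request):
        data = self.secrets[request["name"]]
        return SimpleNamespace(payload=SimpleNamespace(data=data))
```

Access to each secret can then be granted per service account, so 30 microservices do not share one credential file.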
Question No:-30

A lead engineer wrote a custom tool that deploys virtual machines in the legacy data center. He wants to migrate the custom tool to the new cloud environment.

You want to advocate for the adoption of Google Cloud Deployment Manager.

What are two business risks of migrating to Cloud Deployment Manager? (Choose two.)

1. Cloud Deployment Manager uses Python

2. Cloud Deployment Manager APIs could be deprecated in the future

3. Cloud Deployment Manager is unfamiliar to the company's engineers

4. Cloud Deployment Manager requires a Google APIs service account to run

5. Cloud Deployment Manager can be used to permanently delete cloud resources

6. Cloud Deployment Manager only supports automation of Google Cloud resources
