
Apache Flume Architecture with details

Question Posted on 10 Nov 2022


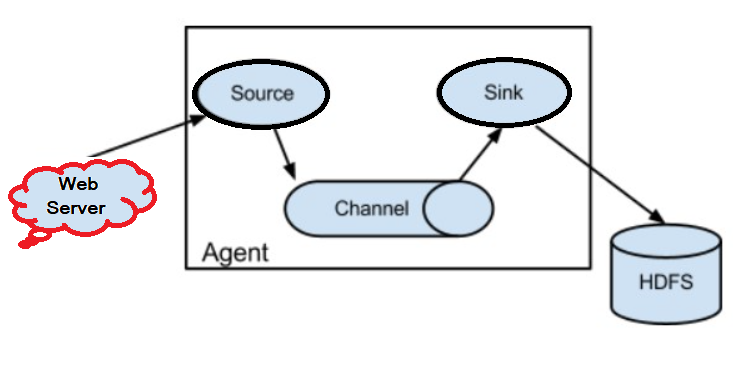

Flume architecture consists of the following elements:

(1) Flume Source

(2) Flume Channel

(3) Flume Sink

(4) Flume Agent

(5) Flume Event

(1) Flume Source: A Flume source is the component that receives data from data generators, such as Twitter or Facebook feeds. The source gathers data from the generator and sends it to a Flume channel in the form of Flume events.

Flume supports a variety of sources, including the Avro Source, which listens on an Avro port and receives events from external Avro clients; the Thrift Source, which listens on a Thrift port and receives events from external Thrift clients; the Spooling Directory Source; and the Kafka Source.

(2) Flume Channel: A Flume channel is a transient store that receives events from the source and buffers them until sinks consume them. It serves as the link between sources and sinks. Channels are fully transactional and can work with any number of sources and sinks.
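To make the buffering and transactional behaviour more concrete, here is a minimal Python sketch. It is not Flume's API: `ToyChannel` and its `put`, `take`, `commit`, and `rollback` methods are hypothetical names chosen for illustration, and the model assumes a single sink and an in-memory buffer.

```python
from collections import deque


class ToyChannel:
    """Hypothetical in-memory buffer with take/commit/rollback semantics."""

    def __init__(self, capacity=100):
        self.capacity = capacity
        self._queue = deque()    # events waiting for a sink
        self._in_flight = []     # events taken but not yet committed

    def put(self, event):
        if len(self._queue) + len(self._in_flight) >= self.capacity:
            raise OverflowError("channel is full")
        self._queue.append(event)

    def take(self):
        event = self._queue.popleft()
        self._in_flight.append(event)
        return event

    def commit(self):
        # The sink confirmed delivery, so the taken events can be dropped.
        self._in_flight.clear()

    def rollback(self):
        # Delivery failed: return taken events to the front, in original order.
        self._queue.extendleft(reversed(self._in_flight))
        self._in_flight.clear()

    def __len__(self):
        return len(self._queue)


channel = ToyChannel()
channel.put(b"line 1")
channel.put(b"line 2")

first = channel.take()
channel.rollback()          # simulate a failed write to the destination
retried = channel.take()    # the same event is offered again
channel.commit()
```

The point of the sketch is that an event leaves the buffer only after the consumer commits, so a failed delivery does not lose data.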

(3) Flume Sink: A Flume sink delivers data to repositories such as HDFS and HBase. It consumes events from the channel and stores them in HDFS or another destination store. A sink does not have to deliver events to a store; alternatively, it can be configured to forward events to another agent. Flume works with various sinks, including HDFS Sink, Hive Sink, Thrift Sink, and Avro Sink.

(4) Flume Agent: A Flume agent is an independent daemon process (a JVM) that hosts sources, channels, and sinks. It receives data (events) from clients or other agents and forwards it to the next destination (a sink or another agent). A Flume deployment may contain many agents.

(5) Flume Event: A Flume event is the basic unit of data transferred in Flume. It consists of a byte-array payload, which must be delivered from the source to the destination, and optional headers.
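The shape of an event can be pictured with a small Python sketch. `ToyEvent` is a hypothetical stand-in, not a Flume class, and the header key `host` is only an example value.

```python
from dataclasses import dataclass, field


@dataclass
class ToyEvent:
    """Hypothetical stand-in for a Flume event: byte payload plus optional headers."""
    body: bytes
    headers: dict = field(default_factory=dict)


# An event with no headers and one with a single illustrative header.
plain = ToyEvent(body=b"GET /index.html 200")
tagged = ToyEvent(body=b"GET /missing 404", headers={"host": "web01"})
```

The payload is opaque bytes, while the headers carry optional key-value metadata about the event.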

Data Flow

Flume is a platform for transferring log data into HDFS. Typically, log servers generate events and log data, and Flume agents run on these servers. The data generators hand their data to these agents.
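The flow described here can be sketched in a few lines of Python. This is a toy model under stated assumptions, not Flume code: the `source` and `sink` functions are hypothetical, a standard `queue.Queue` plays the role of the channel, and a plain list stands in for HDFS.

```python
from queue import Queue


def source(lines, channel):
    """Wrap each raw log line in an event dict and put it on the channel."""
    for line in lines:
        channel.put({"headers": {}, "body": line.encode("utf-8")})


def sink(channel, destination):
    """Drain the channel and append each event body to the destination."""
    while not channel.empty():
        event = channel.get()
        destination.append(event["body"])


log_lines = ["user=alice action=login", "user=bob action=logout"]
channel = Queue()
hdfs_stand_in = []   # a plain list standing in for HDFS

source(log_lines, channel)
sink(channel, hdfs_stand_in)
```

In a real deployment the source and sink run continuously inside an agent; here they run once, in sequence, to show the order in which data moves.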
